November 19, 2012

David Roodman and Julia Clark.

[Note: A follow-up post presents an index and ranking based on the indicators below.]

If you’re a policy researcher, you’ve probably received a request to nominate or rank think tanks for the 2012 Global Go To Think Tank Index (GGTTT). This index, compiled each year by the Think Tanks and Civil Societies Program led by James McGann at the University of Pennsylvania, is the most comprehensive effort to evaluate think tank performance.

In 2011, some 1,500 people nominated more than 5,300 tanks for the GGTTT. Nearly 800 advisers then served on 30 virtual panels that ranked the tanks by region and field in such categories as Best Use of the Media, Greatest Impact on Public Policy, and Best Supporting Actress.

OK, not Best Supporting Actress. But the jest is just. Like the Oscars, Go To Think Tank envelopes go to the institutions that get the most votes, and those votes are based on subjective criteria inscrutably embedded in the heads of the voters. As a result, the meaning of the results is often unclear. It’s not immediately obvious why, for example, the Council on Foreign Relations topped the list of Tanks with the Best Use of the Internet or Social Media to Engage the Public. And being unsure about what CFR did right makes it hard to learn from the institution, or even be sure that it is the right role model. (Enrique Mendizabal, Goran Buldioski, and Christian Seiler and Klaus Wohlrabe have said similar things. Also see David's commentary in 2009.)

Having cast ballots in the Think Tank Oscars, one of us (David) can report that the criterion on which he relied most heavily as he picked a list of tanks for each category was which ones he’d actually heard of. Since a lot of people have heard of the Brookings Institution, we are not surprised that it regularly comes out on top.

Not to knock the éminence grise across the street from us, but all this made us wonder: might there be a more meaningful—dare we say, objective—way to rank tanks? We do not aspire to develop a think tank index, for we lack independence from our subject. But existing efforts seem to leave room for improvement. In general, one can assess think tanks in various ways: gather metrics of their web presence; survey their peers (as the GGTTT essentially does); have a committee review in-depth descriptions of a small number of tanks, as Prospect Magazine does for its think tank award. We expect that the best approach would blend several of these strategies.

As it happens, the instructions to GGTTT panelists encourage them to factor into their qualitative judgments such quantifiable characteristics as:

[Updated Nov 27: NBER citation number corrected from 0. Updated Nov 28: All three Peterson domains included.]

Some big methodological questions have arisen:

[Updated Nov 27: NBER citation number corrected from 0. Updated Nov 28: All three Peterson domains included.]

Some big methodological questions have arisen:

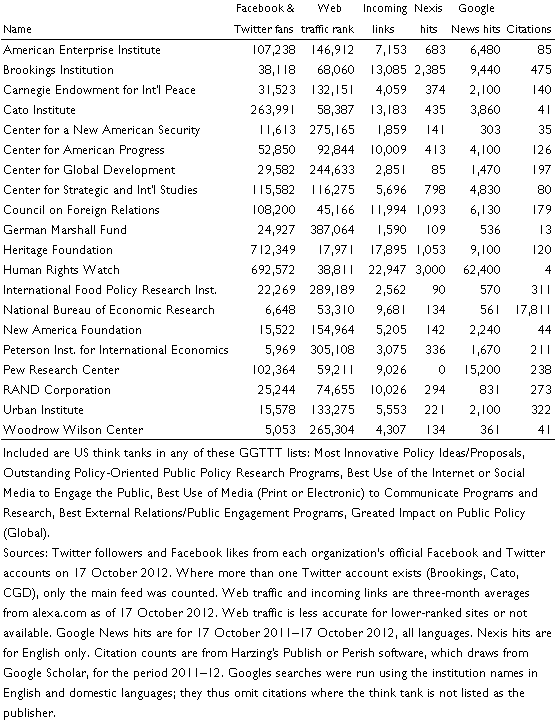

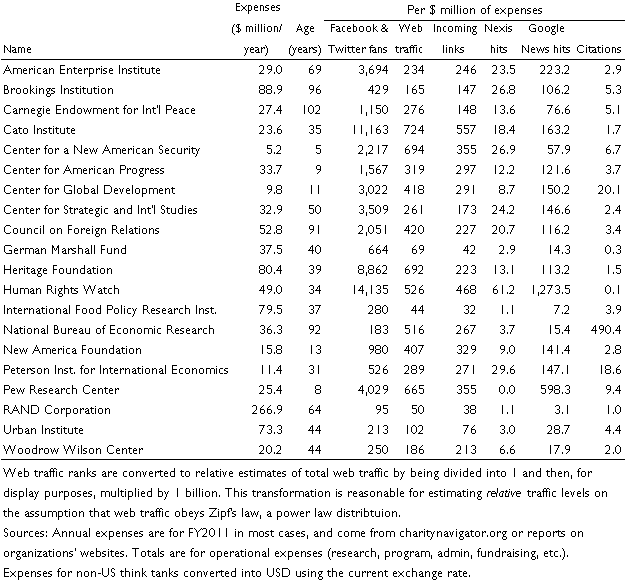

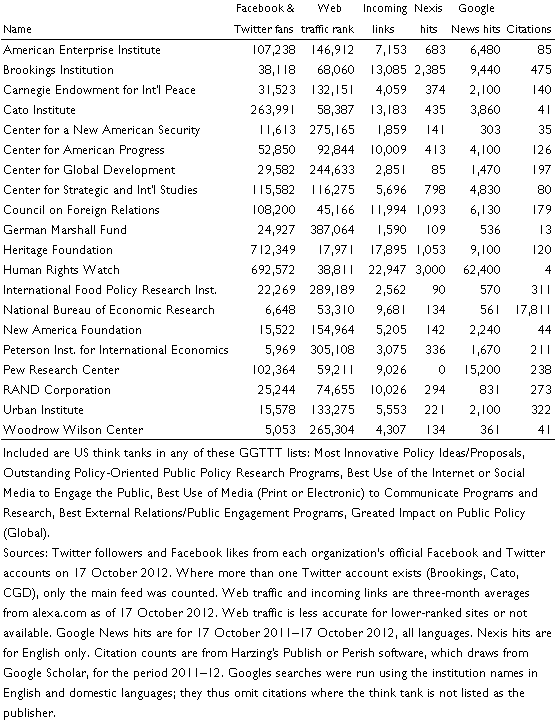

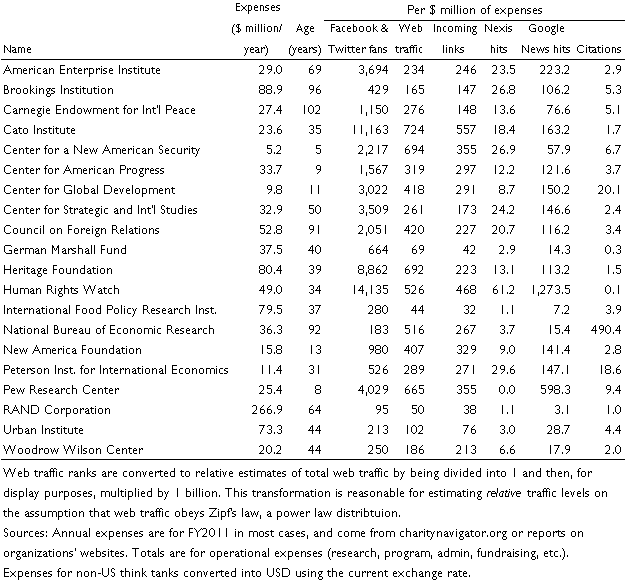

If these things matter, why not measure them directly? Here we take a stab at doing that, perhaps offering an example for a more independent group wanting to blend different kinds of information into a fuller index. Like other quantitative assessments (Posen, Trimbath, FAIR, DCLinktank), our data focus on the public profile of think tanks—media and academic citations, web links, popularity in social media. Compared to those previous assessments, we measure more dimensions of profile. That’s thanks in part to the continuing diversification of the media environment (think Facebook) and the evolution of tools to analyze it, such as Altmetrics. But like them, our measures tend to favor institutions such as CGD that strive to build a high public profile. As David noted in 2009, some tanks succeed by staying out of the limelight, focusing on small but powerful audiences such as legislators. Our experience with building policy indexes such as the Commitment to Development Index makes us keenly aware of the limitations of any such exercise. Think tank profile is not think tank impact. Fundamentally, success is hard to quantify because think tanks aim to shift the thinking of communities. But the operative question is not whether we can achieve perfection. It is whether the status quo can be improved upon. Seemingly, it can be: it only took us a few weeks to choose metrics and gather data, and thus produce additional, meaningful information. A first stab For this initial foray, we focused on the 20 American think tanks that appear on any of the "special achievement" lists of the 2011 GGTTT. The GGTTT also groups tanks by global region, specialty, and affiliation (government, university, political party, independent). We found that choosing our group the way we did produced a list of institutions that are reasonably appropriate to compare, and for which data were easily available. For U.S. 
institutions, for example, budget data can be obtained from publicly available IRS filings. (In the spreadsheet, we also run numbers for a motley crew of 23 think tanks around the world under GGTTT's International Development heading.) The two tables below display six indicators that look promising, being relevant and practical. The first table shows the data we collected. You might say it measures touches per tank or the "influence profile." (In most columns a higher number is better. But for web traffic rank, smaller is better: if Google were on the list, it would get a 1.) The second table divides those figures by each institution’s annual spending in order to gauge efficiency, what we might call touches per dollar or the adjusted influence profile. What’s next? We’d love to hear your criticism and ideas. With that feedback, and after some time to reflect, we may refine the metrics. We'd prefer for you to make your comments public (below) in order to influence anyone who takes the baton from us. For more of our own thoughts, keep reading after the tables.

- Publication of the organization’s work in peer reviewed journals, books and other authoritative publications

- Academic reputation (formal accreditation, citation of think tank; publications by scholars in major academic books, journals, conferences, and in other professional publications);

- Media reputation (number of media appearances, interviews, and citations);

- Who to include: For this exercise, we’ve limited the list to American tanks on GGTTT’s "special achievement" lists, but more could be added. Furthermore, the definition of a think tank isn’t cut and dried (see Enrique Mendizabal’s post). Should we include only organizations whose primary purpose is research, unlike the Friedrich Ebert and Konrad Adenauer foundations, which are primarily grant-making institutions? What about independence from government, political parties, and educational institutions? One option is to follow Posen’s 2002 analysis, which included only independent institutions (excluding RAND) with permanent staff (excluding NBER).

- Unit of analysis: For now, we’ve been looking at data for the think tanks themselves. A more complete picture might also include stats on expert staff. But this is no easy task, and it raises further questions (as Posen also noted). Should think tank performance be based on the institutions themselves, or on the sum of their parts? What about visiting or associated fellows? What about co-authorship, multiple affiliations, and timelines (people move)?

- Time period: The current data cover different time periods: social media figures are a current snapshot, media and scholarly citations are aggregates from 2011–12, and web stats are averages over a three-month period. Ideally, the time period would be standardized, and we would be able to look at multiple years (e.g., a five-year rolling average).

- Quality: The analysis currently includes no indicators of quality, which is often subjective and hard to quantify. When research is normative, ideology also gets in the way. Who produces better quality material, the Center for American Progress or the Cato Institute? (Survey says: depends on your political orientation.) It’s tempting to try to proxy quality by assigning different values to different types of outputs, e.g. weighting peer-reviewed articles more than blog posts because they are “higher quality.” But judging a publication’s importance by where it appears (as the journal impact factor, or JIF, does) doesn’t work in academia, and it would be even less appropriate for policy-oriented researchers and analysts. Think tank outputs are most used by policymakers who need accessible, concise information quickly. They don’t want to pay for or wade through scholarly journals. Not only that, but recent studies suggest the importance of blogs for research dissemination and influence. The NEPC offers reviews of think tank accuracy, but not with the coverage or format that this project would need.

- Output metrics currently consist of publications listed in Google Scholar and tweets, but ideally we would also count other products, like public events, conferences, blogs, and other measures of outreach. But this requires a lot of manual searching and would be difficult to update.

- Social media is an important component of this analysis, particularly for its potential to capture the impact of smaller organizations. We’d like more data from more sites (e.g., YouTube), and a way to track trends over time. Twitter Counter and Facebook Insights provide some analytics, but only to account holders.

- Web traffic data currently come from Alexa.com, one of the more reliable free analytics sites. However, what we can access are estimates of traffic and links (which are less accurate for less-trafficked sites). Precise site numbers are accessible only to page owners. Maybe we can all share?

- Staff size would be a useful addition for calculating organizational efficiency more accurately. These data have to be collected individually, which is not an easy task, particularly since many think tanks (especially the larger ones) don’t list their full staff on their websites.

- Social Mention has promise for tracking social media presence, but it might have difficulty filtering out self-promotion, as it doesn’t appear to differentiate between mentions by staff and non-staff.

- Google PageRank—Google’s algorithm for measuring links into a webpage—is another option for measuring a site’s importance or reputation. However, third-party sites (like this one) give only a standardized version of the real PageRank, which doesn’t contain complete information and offers little variation: nearly all tanks’ websites had PageRanks of 6–8 on a scale of 10.

- Google Trends is another handy tool. It graphs the frequency of searches for a particular term (e.g., “think tanks”) since 2004, including a map of regional interest. Unfortunately, these data are available only for widely searched terms, which leaves out many of the smaller think tanks we looked at.

- Google Scholar user profiles would be extremely helpful for determining the academic impact of individual scholars, if they were more widely used. Even Brookings (one of the world’s largest and oldest tanks) has only five associated scholar pages.

- h-indexes and g-indexes are another alternative to scholarly citation counting. According to many, these metrics provide a more robust picture of how much an academic’s work is valued by her peers.

- ImpactStory is a new open-source webapp for individuals and organizations to monitor impact via altmetrics (read more about it here). It can even import info from your Google Scholar profile! However, it is not immediately useful for ranking think tanks (each paper for each organization would have to be entered by hand!).
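The h-index and g-index mentioned in the list above are easy to compute once per-paper citation counts are in hand. What follows is a minimal Python sketch, assuming those counts have already been gathered (say, by hand from Google Scholar). The function names and the two sample citation lists are hypothetical illustrations, not data from our tables. The h-index is the largest h such that h papers each have at least h citations; the g-index is the largest g such that the g most-cited papers together have at least g² citations. This version caps g at the number of papers, which is one of several conventions in use.

```python
from typing import Sequence


def h_index(citations: Sequence[int]) -> int:
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # counts only fall from here on, so no larger h is possible
    return h


def g_index(citations: Sequence[int]) -> int:
    """Largest g such that the top g papers together have at least g**2 citations.

    Assumption: g is capped at the number of papers (some variants pad the
    list with zero-citation papers, which can allow a larger g).
    """
    ranked = sorted(citations, reverse=True)
    running_total = 0
    g = 0
    for rank, cites in enumerate(ranked, start=1):
        running_total += cites  # citations of the top `rank` papers combined
        if running_total >= rank * rank:
            g = rank
    return g


if __name__ == "__main__":
    # Hypothetical per-paper citation counts for two illustrative scholars.
    steady = [10, 8, 5, 4, 3]
    one_hit = [25, 8, 5, 3, 3]
    for name, cites in [("steady", steady), ("one_hit", one_hit)]:
        print(name, "h =", h_index(cites), "g =", g_index(cites))
```

In this made-up sample, the "one_hit" profile has a lower h-index than the "steady" one (3 vs. 4) but the same g-index (5), because the g-index gives credit for a single heavily cited paper. Neither index addresses the quality concerns raised earlier.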

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.