An article of faith among development economists is that “evidence-based policy” holds the promise of faster progress. Trying out programs on a pilot basis, evaluating them rigorously (ideally through randomized controlled trials, or RCTs), and scaling up those that prove most cost-effective will make public spending more productive and improve development outcomes.

I recently set out to find a rigorously evaluated pilot whose evidence had led to a program at scale. It wasn’t easy. Our journals are replete with papers on rigorously evaluated small-scale programs that produced positive results—not too surprising given publication bias. But there are very few papers on programs taken to scale.

In many cases, the transition from pilot evaluation to program at scale also means a transition in implementation from a carefully selected NGO overseen by a team of academics to a much less nimble government bureaucracy. Justin Sandefur and Tessa Bold are, to my knowledge, the first to examine this issue directly by comparing the impacts of a program in Kenya that showed positive results in a randomized pilot with results at larger scale using two different modes of implementation: one managed by an NGO and the other by the education ministry. They found that an identical program of adding a contract teacher to a school (rather than a civil service teacher) had positive impacts on student test scores when managed by an NGO, but zero impact when managed by the Ministry.

Given this context, two new papers out of Kenya by Benjamin Piper and a large team of co-authors are really encouraging. Both papers grow out of the influential work of USAID and RTI International (Research Triangle Institute) in promoting the measurement of early grade reading across the developing world, especially in Africa, and the transparent sharing of results.

The Kenyan government deserves kudos too. Not every country is willing to spend several years evaluating alternative program elements to identify which combination is truly most cost-effective. Nor do governments always allow researchers to monitor implementation at all levels of the system when a program is taken to scale. But since 2012, when less than 5 percent of first- and second-grade children met the government’s literacy benchmarks and 80 percent of teachers reported no professional development support the prior year, the ministry has completely overhauled the way reading and math are taught in the first two grades, based on evaluation evidence.

Identifying what works to improve reading outcomes

The first paper by Piper and co-authors evaluated the pilot “Primary Mathematics and Reading (PRIMR)” program, with initial funding from USAID. What is innovative is the systematic way the government, with support from DfID specifically for this study, tested (and costed) three different strategies for improving reading outcomes, to identify which elements were most cost-effective and whether there were complementarities among them.

- Strategy 1: Teacher training (10 days per year for 100 percent of teachers) and reinforcement visits from coaches based on the existing curriculum and reading materials, at a cost of $5.63 per pupil.

- Strategy 2: The same amount of training and coaching but with different content, organized around a strategy for teaching reading and a new set of textbooks based on the latest research on literacy. The new books were provided to students on a 1:1 ratio at an additional cost of $2.38 per student.

- Strategy 3: The same amount of training and coaching time and the same new textbooks but with guides for teachers matched to the new textbooks, at an additional cost of $0.16 per pupil.

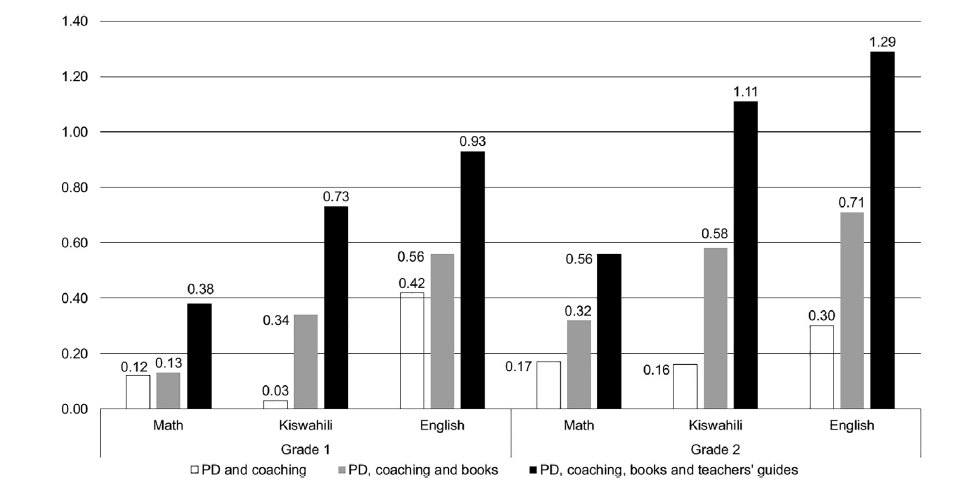

As captured in figure 1, the new materials produced significant improvements in learning, especially for literacy skills, and especially when supported with teacher guides.

Figure 1. PRIMR evaluation: Learning impacts of 3 different literacy strategies

Source: Piper et al. 2018

Because all treatment arms had been carefully costed, the government could conclude that an additional $100 in spending would produce 15 more students reaching the national literacy benchmarks each year under strategy 3, between six and eight more students under strategy 2, and two extra students under strategy 1.
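One way to read these figures is to invert them into an implied cost per additional student reaching the benchmark. The short sketch below does only that arithmetic. It uses the numbers quoted in the paragraph above and nothing from the underlying paper; the variable and function names are illustrative, and the range for strategy 2 simply carries through the "six to eight" range in the text.

```python
# Additional students reaching the national literacy benchmark per
# additional $100 of spending, as quoted in the post (low, high).
students_per_100_usd = {
    "strategy 1": (2, 2),
    "strategy 2": (6, 8),   # the post gives a range of six to eight
    "strategy 3": (15, 15),
}


def cost_per_additional_student(students_low, students_high, spend=100.0):
    """Return (lowest, highest) implied cost per additional student.

    More students per dollar means a lower cost per student, so the
    high student count gives the low end of the cost range.
    """
    return spend / students_high, spend / students_low


implied_costs = {
    name: cost_per_additional_student(low, high)
    for name, (low, high) in students_per_100_usd.items()
}

for name, (cheapest, dearest) in implied_costs.items():
    print(f"{name}: ${cheapest:.2f} to ${dearest:.2f} per additional student")
```

On these figures, strategy 3 works out to under $7 per additional student at the benchmark, against $50 for strategy 1, which is the gap that made the case for scaling strategy 3.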

Scaling up a successful pilot to a national program

PRIMR strategy 3 became the core design of Kenya’s new national literacy program, Tusome (Swahili for “Let’s Read”). In 2015, the Ministry of Education began a two-year effort to scale up the program to 23,000 public primary schools and 1,500 low-cost private schools. In 2017, an independent external evaluation found that the program had been widely implemented and that the share of students nationally meeting the government’s literacy benchmarks had nearly doubled. These are impressive gains for a program scaled up nationally in just two years.

The second new paper by Piper and co-authors focused on the scale-up process, asking: when so many other programs (including in Kenya) have proven impossible to scale, how did the Kenyan government succeed in this case?

To answer, Piper and co-authors draw on the conceptual framework developed by researchers Luis Crouch and Joseph De Stefano on why it’s so hard to achieve system-wide reform in education. Crouch and De Stefano observe that system-level change requires getting decentralized schools and teachers to adopt new behaviors, and that an education ministry’s ability to do this rests on its institutional capacity for three key functions:

- Setting and communicating expectations for education outcomes

- Monitoring and holding schools accountable for meeting expectations

- Intervening to support students and schools when needed

Piper and co-authors show how Tusome’s design addressed, and strengthened, two of these core functions.

First, Tusome is organized around two clear outcome goals: national benchmarks for oral reading fluency (words per minute) in Kiswahili and English that have been communicated extensively through training and coaching programs. Outcome goals are also hardwired into the textbooks and teacher guides, which present a sequence of lessons geared towards achieving “emergent” and “fluent” reading at appropriate points.

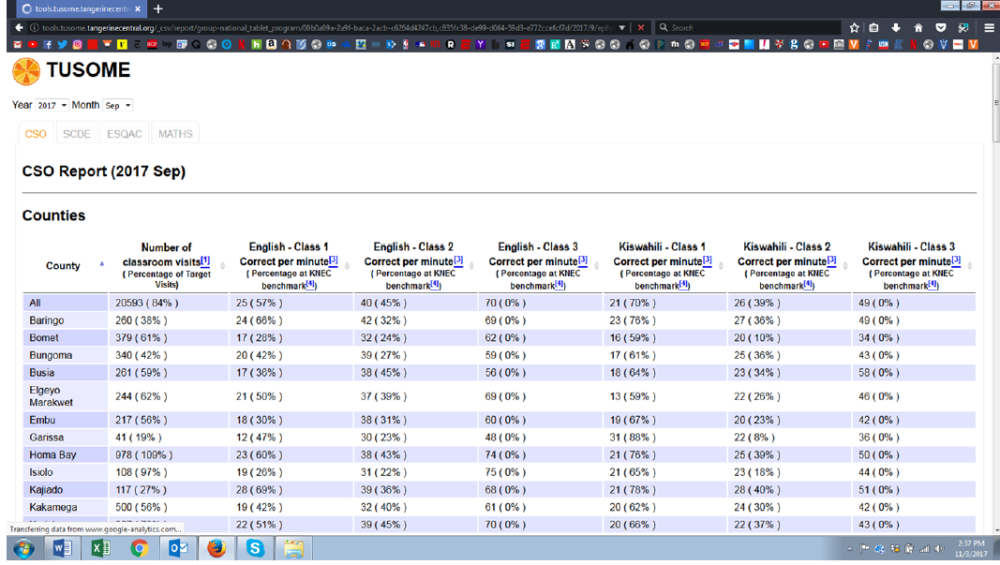

Second, school monitoring is also a key element of Tusome. Curriculum support officers make regular classroom visits using tablets with instructional support tools. For the first time, they are reimbursed for travel to schools, where they spot-check students’ reading, observe teaching, and provide feedback. They upload data on student reading progress and teacher practice, which allows district offices to generate an aggregate picture of their progress compared with other districts, as well as comparative data on their own schools, as shown in figure 2. This is a degree of classroom-level monitoring and data collection that is unprecedented in Kenya, and rarely seen anywhere.

Figure 2. Tusome monitoring data

The third element, targeted support, is the weakest part of the implementation so far. While the school system is for the first time generating real-time data exposing variations in performance at the school and district level, Piper and co-authors documented little action to target resources or support interventions to the schools and districts that are struggling.

Piper and co-authors believe Tusome has transformed the “instructional core” of the first years of schooling. The program moves teachers to engage with their students in a new way, with new teaching techniques, new materials, and new expectations for learning outcomes. They also note Tusome’s focus on the classroom. Regular visits, structured observations, and feedback replace the isolation and performance vacuum found in most school systems at the classroom level. While there are no sanctions or incentives for teachers from the observations and feedback, they believe that the simple fact of classroom-level monitoring has reshaped the system’s norms and teachers’ felt accountability for performance.

Tusome is still only a few years old, and it will be important to watch whether it is sustained, deepens, or fades over time. Big improvements in early-grade reading should lower grade repetition and improve learning outcomes as children move up through primary school; administrative data and Kenya’s third grade national assessment will help confirm that Tusome’s early effects are real and sustained. But through careful research on what works to improve reading, and how programs can be scaled successfully, Kenya and an enterprising group of researchers have made big contributions to our knowledge base, and will hopefully inspire other countries and research teams in the search for the holy grail.

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.